Creating and manipulating ticking tables is the bread and butter of the Deephaven experience. The wide array of table operations offered by the Python API enables real-time data manipulation and analysis at scale. So creating a Deephaven table needs to be easy, and it needs to work with many different kinds of data sources. With the introduction of function-generated tables, this has never been simpler.

Function-generated tables allow you to write arbitrary Python functions for retrieving and cleaning data and use the results to populate a ticking table. The function is then re-evaluated at regular time intervals, and updated data from the function flows seamlessly into your table.

This blog post explores function-generated tables, culminating in a cool example that uses tomorrow.io’s free weather API. Let’s jump in!

A function-generated table is as simple as it sounds: it takes the output of a Python function and stores it in a table. That function can then be re-evaluated at regular intervals to produce snapshots of real-time data that flow into your table without any manual intervention. The result is a single ticking table that updates every time the function is re-evaluated.

In this simple example, we’ll write a Python function that returns a new Deephaven table. Then we’ll use that function to power a function-generated table that holds the data each call produces.

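```python
from deephaven import empty_table, function_generated_table

# Return a fresh 5-row table of random values between 0 and 1 each time it's called
def create_new_table():
    return empty_table(5).update(["X = randomDouble(0.0, 1.0)"])

# Re-evaluate create_new_table every 500 ms and stream the results into one ticking table
generated_table = function_generated_table(create_new_table, refresh_interval_ms=500)
```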

We’ve set the function-generated table to re-evaluate the table-generating function every half second, so generated_table updates twice per second with the new values produced by create_new_table(). The table-generating function must return a Deephaven table, and the table schema cannot change between function calls. You can also pass arbitrary positional and keyword arguments to the table-generating function through the args and kwargs parameters of function_generated_table. See our documentation for more detailed information on function-generated tables.
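Conceptually, each refresh calls the generator with the stored arguments and expects the same columns back every time. The plain-Python sketch below models only that contract. It is not how Deephaven implements function_generated_table: the refresh helper, the make_table generator, and the dict-of-lists "table" are hypothetical stand-ins so the example runs without a Deephaven server.

```python
import random
from typing import Any, Callable, Dict, List, Optional, Tuple

# Hypothetical stand-in for a table: column name -> list of values (not a Deephaven Table)
FakeTable = Dict[str, List[Any]]


def schema_of(table: FakeTable) -> Tuple[Tuple[str, type], ...]:
    # Approximate the schema as (column name, type of first value) pairs
    return tuple(
        (name, type(values[0]) if values else type(None)) for name, values in table.items()
    )


def refresh(
    generator: Callable[..., FakeTable],
    previous: Optional[FakeTable],
    args: Tuple = (),
    kwargs: Optional[Dict[str, Any]] = None,
) -> FakeTable:
    # Call the table-generating function with the stored positional and keyword arguments
    new = generator(*args, **(kwargs or {}))
    # Refuse a snapshot whose schema differs from the previous one
    if previous is not None and schema_of(new) != schema_of(previous):
        raise ValueError("table schema changed between function calls")
    return new


def make_table(n_rows: int, low: float = 0.0, high: float = 1.0) -> FakeTable:
    # Hypothetical generator: one column "X" of uniform random values in [low, high]
    return {"X": [random.uniform(low, high) for _ in range(n_rows)]}


# Two refresh cycles with the same positional and keyword arguments
snapshot = refresh(make_table, None, args=(5,), kwargs={"high": 10.0})
snapshot = refresh(make_table, snapshot, args=(5,), kwargs={"high": 10.0})
```

The second call succeeds because make_table always returns the same single float column; a generator that suddenly returned different columns would be rejected, mirroring the fixed-schema rule described above.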

The real utility of function-generated tables comes from defining a single endpoint for the data they generate, and then treating that endpoint as its own table, with all of the functionality and power that comes with it.

Without function-generated tables, achieving the same effect as the example above would require a script that manually redefines the table every half second. Queries built on the original table would not pick up the new data: each redefinition creates a brand-new table in a new place in memory, and the tables derived from the old one have no connection to it. Function-generated tables solve this problem by providing a single endpoint for the table-creation process. No matter how the changes in data come about, they always end up in the same ticking table, and anything built from that table inherits all of its updates seamlessly.
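A toy analogy in plain Python shows why a single endpoint matters. Rebinding a name to a new object leaves any view built on the old object stranded, while refreshing one object in place lets every view see the new data. The Endpoint and DerivedView classes are hypothetical and only illustrate the idea; Deephaven’s own change propagation is far more involved.

```python
import itertools


class Endpoint:
    """Hypothetical stand-in for a single ticking table that is refreshed in place."""

    def __init__(self, generator):
        self.generator = generator
        self.rows = generator()

    def refresh(self):
        # Replace the contents in place; the list object the views hold stays the same
        self.rows[:] = self.generator()


class DerivedView:
    """Hypothetical downstream query: doubles every value of its parent on demand."""

    def __init__(self, parent_rows):
        self.parent_rows = parent_rows

    def values(self):
        return [2 * x for x in self.parent_rows]


counter = itertools.count(1)


def make_rows():
    # Each call produces a new "snapshot": [n, n, n] with n = 1, 2, 3, ...
    n = next(counter)
    return [n, n, n]


# Manual approach: rebinding the name creates a new object that the view never sees
table = make_rows()
manual_view = DerivedView(table)
table = make_rows()

# Single-endpoint approach: refresh in place, so the view sees every update
endpoint = Endpoint(make_rows)
live_view = DerivedView(endpoint.rows)
endpoint.refresh()
```

After these steps, manual_view still reflects the first snapshot even though table now holds the second, while live_view reflects the latest refresh of endpoint.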

Now that we’ve seen a straightforward usage example, let’s create something more interesting using a real-world endpoint to fetch data.

For this example, you’ll need an API key from tomorrow.io. Don’t worry, they’re free, and the API that you get access to is highly functional. If you’re a weather data enthusiast, I’d highly recommend checking them out.

To start, we’ll define some Python functions to retrieve and clean data from the API. First, import the necessary libraries, set up a global variable for our API key, and write a function to perform the API call at a given location and retrieve the data in JSON format.

Be sure to set an environment variable called API_KEY to your own API key from tomorrow.io. Otherwise, none of the subsequent code will run.

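```python
import os

# Install the requests library if it isn't already available in this environment
os.system("pip install requests")

import requests

# Read the tomorrow.io API key from the API_KEY environment variable
API_KEY = os.environ["API_KEY"]

def forecast_from_location(location):
    # Request the forecast for the given location; fail loudly on HTTP errors
    response = requests.get(
        f"https://api.tomorrow.io/v4/weather/forecast?location={location}&apikey={API_KEY}",
        timeout=30,
    )
    response.raise_for_status()
    json_response = response.json()
    # Keep only the minute-by-minute forecast
    minutely_forecast = json_response["timelines"]["minutely"]
    return minutely_forecast
```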

This gives us a list of dictionaries, parsed from the JSON response, that make up a minute-by-minute weather forecast for the next hour at the given location. Each entry holds a time and a dictionary of forecast values.
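forecast_from_location builds its request URL with an f-string. If you’d rather let the standard library handle escaping, here is a sketch, under that assumption, that builds the same query string with urllib.parse.urlencode. requests performs equivalent encoding when you pass a dict through its params argument. The build_forecast_url name and the placeholder key are illustrative only.

```python
from urllib.parse import urlencode

# Base endpoint taken from the request in forecast_from_location
FORECAST_ENDPOINT = "https://api.tomorrow.io/v4/weather/forecast"


def build_forecast_url(location: str, api_key: str) -> str:
    # urlencode escapes reserved characters, e.g. the comma in "lat,lon" becomes %2C
    query = urlencode({"location": location, "apikey": api_key})
    return f"{FORECAST_ENDPOINT}?{query}"


url = build_forecast_url("30.2672,-97.7431", "YOUR_API_KEY")
```

With requests, the equivalent call is requests.get(FORECAST_ENDPOINT, params={"location": location, "apikey": API_KEY}, timeout=30); the server should decode %2C back to a comma.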

Now, we need to use that data to populate a Deephaven table. That requires a bit of reformatting, as Deephaven tables cannot be directly instantiated from JSON-formatted data.

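```python
from deephaven import new_table
from deephaven.column import datetime_col, float_col

def table_from_forecast(forecast):
    # Flatten each record: {'time': ..., 'values': {...}} -> {'time': ..., 'cloudCover': ..., ...}
    unpacked_forecast = [{"time": this_dict["time"], **this_dict["values"]} for this_dict in forecast]

    # Pivot the list of records into a dict of columns: {'time': [...], 'cloudCover': [...], ...}
    forecast_dict = {k: [dic[k] for dic in unpacked_forecast] for k in unpacked_forecast[0]}

    # Pull out the timestamps; the remaining columns hold numeric forecast values
    timestamp = forecast_dict.pop("time")

    # Build the table: one datetime column plus one float column per forecast variable
    table = new_table([
        datetime_col("timestamp", timestamp),
        *[float_col(key, values) for key, values in forecast_dict.items()],
    ])

    return table
```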
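To see what the reshaping inside table_from_forecast does, here are the same steps applied in plain Python to two hand-written records. The values are invented for illustration; only the shape (a "time" key plus a nested "values" dictionary) mirrors what the function expects.

```python
# Two made-up records shaped like the entries in timelines.minutely (values are invented)
sample_forecast = [
    {"time": "2023-10-10T14:00:00Z", "values": {"temperature": 30.1, "humidity": 45.0}},
    {"time": "2023-10-10T14:01:00Z", "values": {"temperature": 30.2, "humidity": 44.0}},
]

# Step 1: flatten each record so "time" sits alongside the forecast values
unpacked = [{"time": d["time"], **d["values"]} for d in sample_forecast]

# Step 2: pivot the records into one list per column
columns = {k: [row[k] for row in unpacked] for k in unpacked[0]}

# Step 3: separate the timestamps from the numeric columns
timestamps = columns.pop("time")
```

After these steps, timestamps holds the two time strings and columns maps each forecast variable to its list of values, which is the column-oriented layout new_table needs.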

Now, we have a function that returns a Deephaven table with the latest data from tomorrow.io’s weather API every time it’s called. Sounds like a perfect use case for a function-generated table. We’ll define one final function to tie these two together, and use that to power our function-generated table.

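```python
from deephaven import function_generated_table

def table_from_location(location):
    # Fetch the latest forecast and convert it to a Deephaven table
    forecast = forecast_from_location(location)
    return table_from_forecast(forecast)

# Latitude,longitude for Austin, TX
LOCATION = "30.2672,-97.7431"

# Re-run table_from_location every 5 minutes, passing the location as a keyword argument
forecast_table = function_generated_table(
    table_generator=table_from_location,
    refresh_interval_ms=5 * 60 * 1000,
    kwargs={"location": LOCATION},
)
```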

Here, we create a global variable to hold a latitude-longitude string (I’m in Austin, TX, so I chose the coordinates accordingly) and pass it to the table-generating function on every call. This shows how to use the kwargs argument of function_generated_table to pass arbitrary keyword arguments to the table-generating function. The API refreshes its data every minute, but we set refresh_interval_ms to re-evaluate the function only every 5 minutes so that we don’t exceed our API call limit. This means that forecast_table always holds tomorrow.io’s most recent predictions (no more than about 5 minutes old), and any operations we perform on that table of predictions will also update in real time. Let’s see some of those table operations in action.
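Choosing refresh_interval_ms is a budgeting decision. With 5 * 60 * 1000 ms (300,000 ms), the generator runs 12 times per hour and 288 times per day, not counting any initial evaluation when the table is created. The helpers below are a hypothetical sketch of that arithmetic, and the 25-calls-per-hour limit in the example is an assumed figure; check the actual limits of your own tomorrow.io plan.

```python
MS_PER_MINUTE = 60 * 1000


def calls_per_period(refresh_interval_ms: int, period_ms: int) -> int:
    # Number of scheduled re-evaluations in a period (ignores any initial call)
    return period_ms // refresh_interval_ms


def min_interval_ms(max_calls: int, period_ms: int) -> int:
    # Smallest whole-minute interval that keeps scheduled calls within max_calls per period
    minutes = -(-period_ms // (max_calls * MS_PER_MINUTE))  # ceiling division
    return minutes * MS_PER_MINUTE


per_hour = calls_per_period(5 * MS_PER_MINUTE, 60 * MS_PER_MINUTE)
per_day = calls_per_period(5 * MS_PER_MINUTE, 24 * 60 * MS_PER_MINUTE)
# Assumed limit of 25 calls per hour, for illustration only
suggested = min_interval_ms(25, 60 * MS_PER_MINUTE)
```

Rounding up to whole minutes leaves some headroom below the limit, which helps absorb the initial evaluation and any manual testing calls.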

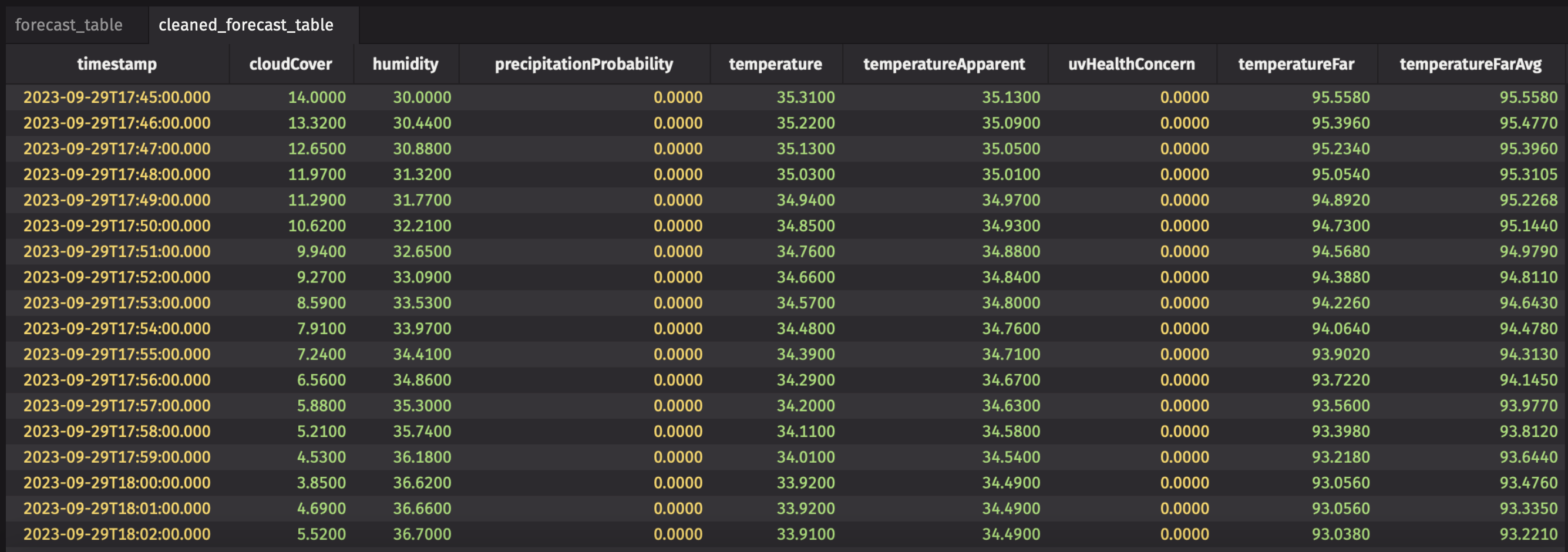

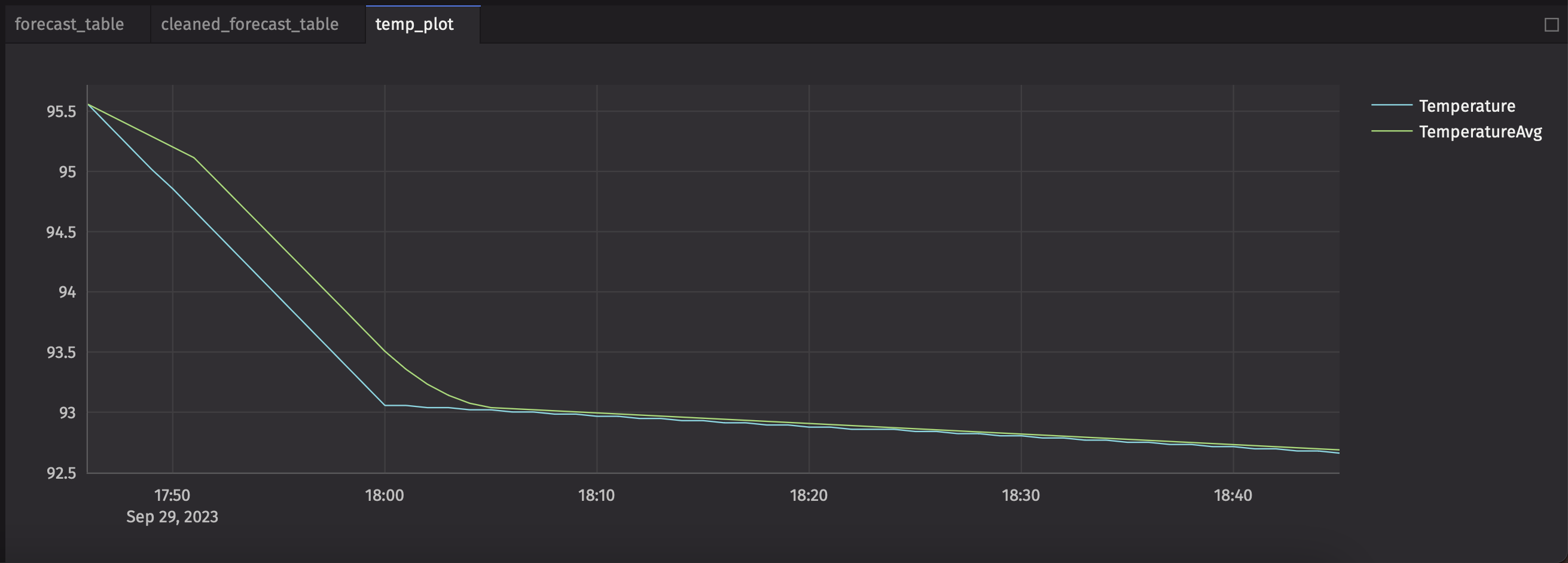

First, we select only the columns of interest. It’s October in Austin, so freezing rain, sleet, and snow are pretty much off the table. Humidity, apparent temperature, and chances of rain are much more interesting down here. Then, we create a new column of predicted temperature values in Fahrenheit (the API gives us temperature in Celsius) and compute the 5-minute rolling average of the predicted temperature using update_by(). Finally, we plot the predicted temperature and its 5-minute rolling average, so we can visualize in real time just how rough the next hour is going to be.

```python
from deephaven import updateby as uby
from deephaven.plot.figure import Figure

# Keep the columns of interest, convert to Fahrenheit, and add a 5-minute rolling average
cleaned_forecast_table = (
    forecast_table
    .select(["timestamp", "cloudCover", "humidity", "precipitationProbability",
             "temperature", "temperatureApparent", "uvHealthConcern"])
    .update(["temperatureFar = 32 + 9.0 / 5.0 * temperature"])
    .update_by(uby.rolling_avg_time("timestamp", "temperatureFarAvg = temperatureFar", "PT00:05:00"))
)

# Plot the predicted temperature and its rolling average against time
temp_plot = (
    Figure()
    .plot_xy(series_name="Temperature", t=cleaned_forecast_table, x="timestamp", y="temperatureFar")
    .plot_xy(series_name="TemperatureAvg", t=cleaned_forecast_table, x="timestamp", y="temperatureFarAvg")
    .show()
)
```

This plot, like everything else in Deephaven, will update when the parent tables update. In this case, that’s every 5 minutes, due to API restrictions.
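To check what the update() and update_by() steps compute, here is a plain-Python sketch on a handful of invented minutely readings. trailing_time_avg is a hypothetical re-implementation written for illustration, not Deephaven’s uby.rolling_avg_time, and it assumes the 5-minute look-back window includes both endpoints, [t - 5 min, t]; consult the Deephaven documentation for the exact boundary rules.

```python
from datetime import datetime, timedelta
from typing import List


def to_fahrenheit(celsius: float) -> float:
    # Same formula as the update() call: F = 32 + 9/5 * C
    return 32 + 9 / 5 * celsius


def trailing_time_avg(times: List[datetime], values: List[float], window: timedelta) -> List[float]:
    # For each row, average the values whose timestamps fall in [t - window, t].
    # Assumes an inclusive window at both ends; O(n^2), fine for an hour of minutely data.
    averages = []
    for t in times:
        in_window = [v for ts, v in zip(times, values) if t - window <= ts <= t]
        averages.append(sum(in_window) / len(in_window))
    return averages


# Seven invented minutely readings in Celsius
start = datetime(2023, 10, 10, 14, 0)
times = [start + timedelta(minutes=i) for i in range(7)]
celsius = [0.0, 5.0, 10.0, 15.0, 20.0, 25.0, 30.0]

fahrenheit = [to_fahrenheit(c) for c in celsius]
averages = trailing_time_avg(times, fahrenheit, timedelta(minutes=5))
```

Early rows average over fewer points because the window reaches back past the start of the data, so the rolling average starts out close to the raw values and smooths them more as the window fills.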

Function-generated tables are highly flexible and provide an avenue to get real-time data into a Deephaven table through just about any route imaginable. If you have an interesting use case, please reach out to us on our Community Slack channel!

If you’re into weather data, be sure to check out tomorrow.io’s API, as we only showcased a tiny fraction of the data they provide.

To learn more about Deephaven, please head to our Community documentation for more information. Happy coding!
