This essay explores how cognitive science can serve as a blueprint for AI agents, giving us a framework to understand AI developments, pinpoint gaps in current systems, and contrast human and AI minds. We walk through how the key components – perception (data inputs), working memory (context windows), procedural long-term memory (model weights), declarative long-term memory (databases), motor functions (tools), and the orchestrator – work together.

Understanding our minds is a quest pursued since antiquity. The Greek philosopher Aristotle explored internal mental processes such as perception, thinking, and memory, laying groundwork for cognitive psychology. Meanwhile, in the East, the Indian philosopher Gotama analyzed the steps involved in perception, influencing later information-processing models.

Today, cognitive science and computer science aim not only to understand the mind but also to recreate it, each from its own discipline’s perspective. Cognitive science models natural minds, focusing mainly on the processes that generate human thought. The field of artificial intelligence (AI), nested within computer science, seeks to build algorithms and systems that mimic intelligent behavior.

For much of AI’s history, developments, though valuable, were limited relative to the lofty goal of recreating the mind. Traditionally, AI algorithms were task-specific, lacking the flexibility that is second nature to humans, and AI engineers focused on optimizing accuracy for those specific tasks. The advent of foundation models shifted this paradigm by offering the first glimpses of general artificial intelligence: these models exhibit some of the creativity and flexibility once thought unique to human minds. Over the past six months, AI engineers have pivoted from optimizing task-specific models to designing autonomous systems, or AI agents.

Cognitive science, on the other hand, has been building holistic models of the mind for decades. There is a rich history from which AI researchers could glean valuable insights. This essay aims to draw parallels between these two fields and highlight the insights, some practical and some intellectual, that can be learned from the comparisons.

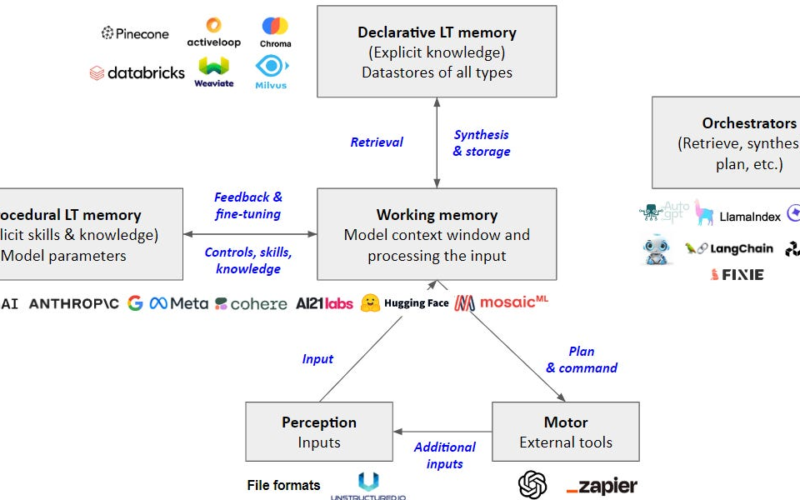

Cognition refers to the mental processes our brains use to acquire, process, store, and apply information. It is analogous to the software our brain runs to interpret and engage with the world around us. A cognitive architecture, in turn, is analogous to a software architecture: it outlines the components of a system and how they function together. Over the past 40 years, more than 80 cognitive architectures have been published in the scientific literature. Despite this variety, a broad consensus has emerged around what a standard model of cognition entails. It comprises five primary components: perception, working memory, procedural long-term memory (implicit knowledge), declarative long-term memory (explicit knowledge), and motor functions. As a model of the human mind, it also serves as a blueprint for building AI agents. I’ve added a sixth component, the orchestrator. It is not part of the standard model but is a helpful construct for completing the framework. Let’s examine each one in turn.

Perception: Perception involves interpreting sensory data we receive from our surroundings. We have five main senses (sight, hearing, taste, smell, and touch), and perception is the process our brains employ to make sense of the information they provide.

In AI, perception corresponds to tasks typically associated with computer vision, natural language processing, and other sensor-data processing techniques. Concretely, its raw inputs are files such as .txt, .jpg, and .mp3, digital representations of information that models can consume. However, not all data comes in model-readable formats (e.g., PDFs), so we also count tools like Unstructured.io, which convert such formats into AI-readable ones, as part of perception.
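As a rough illustration of that conversion step, the sketch below uses only Python’s standard library to strip an HTML page down to plain text a model can read. HTML stands in for PDF here because PDF parsing needs third-party libraries; tools like Unstructured.io handle far more formats and layouts, and this is not how they work internally. The names `TextExtractor` and `html_to_text` are hypothetical.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text from an HTML document, skipping <script>/<style>."""

    def __init__(self) -> None:
        super().__init__()
        self._chunks: list[str] = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is not script or style content.
        if self._skip_depth == 0 and data.strip():
            self._chunks.append(data.strip())

    def text(self) -> str:
        return " ".join(self._chunks)


def html_to_text(html: str) -> str:
    """Turn markup into plain text that a language model can read directly."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return parser.text()


page = (
    "<html><head><style>p {color: red}</style></head>"
    "<body><h1>Invoice</h1><p>Total: $42</p></body></html>"
)
print(html_to_text(page))  # Invoice Total: $42
```

The design choice is deliberately lossy: layout and styling are discarded, keeping only the text a language model would reason over.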

Working Memory: Often compared to a mental workspace, working memory is the part of our memory that temporarily holds and manipulates information. When you do mental arithmetic or keep a phone number in mind long enough to dial it, you are using your working memory.

In an AI context, working memory can be thought of as temporary storage and a processing space for transient data. The context windows of large language models (LLMs) serve a similar purpose: a context window holds the input that users (or other machines) provide, which the model processes alongside any additional information retrieved from databases. Like human working memory, it is finite; its size is measured in tokens, and content beyond that limit must be dropped or summarized.
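Because a context window has a fixed token budget, something has to decide what stays in it. The sketch below shows one simple policy, evicting the oldest items first. It is a minimal illustration under stated assumptions: `count_tokens` treats each whitespace-separated word as one token (real LLMs use subword tokenizers, so real counts are higher), and `ContextWindow` is a hypothetical class, not any framework’s API.

```python
from collections import deque


def count_tokens(text: str) -> int:
    # Assumption: one token per whitespace-separated word. Real LLM
    # tokenizers split text into subwords, so actual counts will be higher.
    return len(text.split())


class ContextWindow:
    """A fixed-budget working memory: new items push out the oldest ones."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.items: deque[str] = deque()
        self.used = 0

    def add(self, text: str) -> None:
        cost = count_tokens(text)
        if cost > self.max_tokens:
            raise ValueError("item alone exceeds the context budget")
        self.items.append(text)
        self.used += cost
        # Evict the oldest items until we are back within budget.
        while self.used > self.max_tokens:
            dropped = self.items.popleft()
            self.used -= count_tokens(dropped)

    def render(self) -> str:
        """Join the surviving items into the text the model would see."""
        return "\n".join(self.items)


window = ContextWindow(max_tokens=8)
window.add("user: what is the capital of France")  # 7 tokens
window.add("retrieved: Paris")                      # 2 tokens, evicts the question
print(window.render())  # retrieved: Paris
```

The example deliberately shows a failure mode: with a tight budget, the user’s original question is the first thing forgotten. Real systems often summarize old content instead of dropping it outright.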

Procedural Long-term Memory: This is the part of memory responsible for skills and habits. Procedural memories involve tasks like riding a bike, typing on a keyboard, or driving a car. It’s termed “procedural” because it’s primarily about knowing how to perform procedures or actions. These memories are typically unconscious, and we execute them effortlessly.

In AI, procedural memory refers to the implicit skills and moral boundaries embedded in a model’s weights (the learned parameters of its neural network). For example, ChatGPT is trained to play along when asked to joke about most religions. When it comes to Islam, however, ChatGPT will refuse to make jokes, explain why, and prompt the user to be more considerate.

Declarative Long-term Memory: This memory stores facts and events. It’s divided into two subtypes: semantic and episodic memory. Semantic memory is for general knowledge about the world, like knowing that Paris is the capital of France or that dogs are mammals. Episodic memory, on the other hand, is for personal experiences or events, like remembering your first day at school or what you had for breakfast yesterday.

In AI, declarative memory corresponds to databases. There are various ways to implement it, because information can be stored in different forms, and which database to use depends on the use case. For example, knowledge graphs are an effective way to store facts and the relationships between them, while vector databases such as Activeloop, Chroma, Pinecone, and Weaviate are optimized to store and search numerical representations (vectors, also called embeddings) of text and images.
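To make the knowledge-graph option concrete, here is a minimal in-memory sketch that stores semantic facts as (subject, relation, object) triples and answers multi-hop questions by following relations. The class, method, and relation names are hypothetical; real graph databases add indexing, schemas, and query languages on top of this basic idea.

```python
from collections import defaultdict


class TripleStore:
    """A toy knowledge graph: facts stored as (subject, relation, object) triples."""

    def __init__(self) -> None:
        self._by_subject: defaultdict[str, list[tuple[str, str]]] = defaultdict(list)

    def add(self, subject: str, relation: str, obj: str) -> None:
        self._by_subject[subject].append((relation, obj))

    def query(self, subject: str, relation: str) -> list[str]:
        """Return every object linked to `subject` by `relation`."""
        return [o for r, o in self._by_subject.get(subject, []) if r == relation]

    def follow(self, start: str, *relations: str) -> list[str]:
        """Follow a chain of relations, one hop per relation name."""
        frontier = [start]
        for relation in relations:
            frontier = [o for node in frontier for o in self.query(node, relation)]
        return frontier


kg = TripleStore()
kg.add("Paris", "capital_of", "France")
kg.add("France", "located_in", "Europe")
kg.add("dog", "is_a", "mammal")

print(kg.query("Paris", "capital_of"))                 # ['France']
print(kg.follow("Paris", "capital_of", "located_in"))  # ['Europe']
```

The two-hop query is where graphs earn their keep: the answer “Europe” is never stored next to “Paris”, but the stored relationships let the system derive it.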

Motor: This component of cognition is dedicated to planning and executing physical actions. Whether it’s something complex like playing a musical instrument or something simple like picking up a glass of water, your motor cognition is in operation. It assists you in planning the movement, coordinating your muscles, and making adjustments based on feedback like what you see and feel.

In AI software systems, motor functions correspond to the ability to act on other software systems, such as accessing your file system or drafting an email for you. Acting on external systems is what distinguishes motor skills from the procedural skills embedded inside the neural network. The range of skills an application needs depends on its use case. ChatGPT plugins and Zapier’s collection of API integrations are examples of skill marketplaces.
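The core of a motor component can be sketched as a registry of callable tools plus a dispatcher that executes whatever call the model emits. This is a minimal sketch under assumptions: the JSON call format (`{"tool": ..., "args": {...}}`), the tool names, and their stub bodies are all hypothetical and do not reproduce the ChatGPT plugin or Zapier APIs.

```python
import json
from typing import Callable


# Hypothetical tools. In a real agent these would call external systems
# (a file system, an email API, and so on); here they return canned data.
def list_files(directory: str) -> list[str]:
    return ["notes.txt", "todo.md"] if directory == "~/docs" else []


def draft_email(to: str, subject: str) -> str:
    return f"To: {to}\nSubject: {subject}\n\n(draft body)"


TOOLS: dict[str, Callable[..., object]] = {
    "list_files": list_files,
    "draft_email": draft_email,
}


def run_tool_call(model_output: str) -> object:
    """Execute a tool call the model emitted as JSON: {"tool": ..., "args": {...}}."""
    call = json.loads(model_output)
    name = call["tool"]
    if name not in TOOLS:
        # Refuse anything outside the registry rather than guessing.
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("args", {}))


print(run_tool_call('{"tool": "list_files", "args": {"directory": "~/docs"}}'))
# ['notes.txt', 'todo.md']
```

Keeping the registry explicit means the set of actions an agent can take is exactly the set of functions registered, which mirrors how the range of motor skills depends on the use case.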

Orchestrator: This component manages and coordinates the interactions between all the other components. In AI, this could involve dictating when and how data is retrieved from databases, how it is fed into the context window, and how it is used to plan the system’s actions. Examples include app frameworks like LlamaIndex and LangChain and no-code orchestrators like Respell and Stack. Some of the processes it coordinates include:

- Retrieval: The process of bringing information from long-term memory back into working memory. For example, when you try to recall a movie title or a historical fact, you are using retrieval. In AI, retrieval could be implemented by querying a database or a knowledge graph to pull up relevant information.

- Encoding: The process of converting information into a form that can be stored in memory. For instance, when you learn a new fact, your brain encodes it into neural patterns that can be stored in long-term memory. In AI systems, data is encoded into numerical or symbolic representations that a machine learning model can process.

- Consolidation: The process by which temporary memories are converted into a more permanent form for long-term storage. It differs from encoding in that we store only an imperfect, synthesized version of the information that passed through our minds. In AI, a similar process occurs during training or fine-tuning, when a neural network’s weights are updated to reflect new knowledge. It also covers summarizing and synthesizing working memories into a database for storage.

- Planning: Part of what cognitive scientists call executive function, a kind of top-level management system in the brain that includes skills like task-switching and planning. In an AI system, this could be a task-management loop like the one in BabyAGI, which approximates a decision-making system that directs lower-level processes based on overall goals.
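A BabyAGI-style planning loop can be sketched as a task queue: pop a task, execute it, store the result (a crude form of consolidation), then queue any follow-up tasks. This is a minimal sketch, not BabyAGI’s code: `execute` and `propose_new_tasks` are hypothetical stand-ins for LLM calls, and BabyAGI’s additional step of reprioritizing the queue with another model call is omitted.

```python
from collections import deque


def execute(task: str) -> str:
    # Hypothetical stand-in for an LLM call that performs one task.
    return f"done: {task}"


def propose_new_tasks(task: str, result: str) -> list[str]:
    # Hypothetical stand-in for an LLM call that plans follow-up work.
    # Only the initial goal spawns subtasks here, so the loop terminates.
    if task == "research topic":
        return ["summarize sources", "draft outline"]
    return []


def run_agent(goal_task: str, max_steps: int = 10) -> list[str]:
    """Pop a task, execute it, store the result, then queue any follow-ups."""
    queue = deque([goal_task])
    memory: list[str] = []  # stands in for long-term storage
    steps = 0
    while queue and steps < max_steps:
        task = queue.popleft()
        result = execute(task)
        memory.append(result)  # consolidation: keep the outcome
        queue.extend(propose_new_tasks(task, result))  # planning: what next?
        steps += 1
    return memory


print(run_agent("research topic"))
# ['done: research topic', 'done: summarize sources', 'done: draft outline']
```

The `max_steps` cap is a safety valve: when real model calls propose follow-up tasks, nothing guarantees the queue ever empties on its own.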

Let’s walk through a cognitive cycle to understand how it all works together. As you read this essay, your eyes take in characters (objectively, just arbitrary lines of contrasting color). These are passed to working memory, where your procedural reading skill gives them meaning. The orchestrator and working memory then pull word and sentence meanings, along with associated memories, from declarative memory. As you learn (as I hope you will reading this), your declarative memory is updated with new concepts and their associations with past memories. Meanwhile, your mental planner may (hopefully) decide that this essay is worth reading further, so you instruct your hand to keep scrolling.

A more technical illustration of the cognitive cycle is the common design pattern for AI chatbots that retrieve documents to answer questions, often called retrieval-augmented generation (RAG). Suppose you are talking to such a chatbot. Your question is the input to the system. It is encoded into a vector representation (a list of numbers) by an embedding model. A retrieval function then compares this query vector with document vectors stored in a database and selects the most relevant documents. These documents are placed in the LLM’s context window as additional input alongside your question. The LLM then synthesizes an answer, or it may decide to carry out your request (e.g., “Can you reset my password?”) using the skills available to it.
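The encode, compare, select, and assemble steps of this pattern can be sketched in a few lines. This is a minimal sketch under loud assumptions: `embed` is a toy bag-of-words counter standing in for a real embedding model (which would return dense float vectors), the in-memory list stands in for a vector database, the documents are made up, and the final LLM call is left out; only the prompt it would receive is built.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": word counts. A real system would call an embedding
    # model and get a dense vector of floats instead.
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


DOCS = [
    "To reset your password, open Settings and choose Reset password.",
    "Our office is open Monday to Friday.",
    "Invoices are emailed at the start of each month.",
]
DOC_VECTORS = [embed(d) for d in DOCS]  # precomputed, like rows in a vector DB


def retrieve(question: str, k: int = 1) -> list[str]:
    """Rank documents by similarity to the question and keep the top k."""
    q = embed(question)
    ranked = sorted(range(len(DOCS)), key=lambda i: cosine(q, DOC_VECTORS[i]), reverse=True)
    return [DOCS[i] for i in ranked[:k]]


def build_prompt(question: str) -> str:
    """Place the retrieved documents in the context window next to the question."""
    context = "\n".join(retrieve(question))
    return f"Answer using this context:\n{context}\n\nQuestion: {question}"


print(retrieve("How do I reset my password?"))
# ['To reset your password, open Settings and choose Reset password.']
```

Word-count vectors only match on shared words, which is exactly the weakness learned embeddings address: they can place “forgot my login” near “reset your password” even with no words in common.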

This framework is useful for understanding the core parts of an AI agent, though it leaves out finer details like fine-tuning, setting boundaries, and caching prompts. It is meant as a simpler, more intuitive complement to more technical overviews. Here’s how I’ve used it:

Keep up with fast AI developments: For example, Meta, in partnership with Microsoft, just launched Llama-2. Using this framework, non-technical readers can see that Llama-2 is another addition to the pool of available large language models (LLMs), which supply working and procedural memory. Unless Llama-2 shows a big improvement over current models or integrates better with the other components, that takeaway is enough. The model’s details, of course, still matter to technical readers.

Find bottlenecks and opportunities in developing AI systems: There are many well-funded foundation model and database companies, but we’re only starting to see companies focused on orchestrators, perception, and motor skills. Putting on my venture hat, startups in these categories are the ones to join or invest in. Deciding which companies are best, of course, requires a deeper look, something for future articles.

Compare the human and artificial mind (my favorite): We are moving toward AI systems that surpass us in most mental abilities. Human working memory is limited in capacity, classically estimated at about 7 items, give or take two. By contrast, the context windows of today’s AI models can hold on the order of 100,000 tokens or more (a token is roughly three-quarters of an English word), enough to fit an entire novel. When it comes to long-term memory, databases can grow practically without limit. While scientists haven’t found a limit to our brain’s capacity to store facts, humans need a lot of effort to learn. Software is also more reliable: our mental abilities depend on mood, sleep, age, and other seemingly trivial factors (like sunshine).

But humans still have an advantage in orchestration, which is part problem-solving, part creativity, part mystery. This may be the last practical area where the human brain excels. AI systems struggle to retrieve the right information because of imperfect encoding models and retrieval metrics. Feed the model the wrong context, and you get a convincing piece of text or a computer command that is wrong. Humans, by contrast, put information together, connect the dots between memories, and find the right context effortlessly.
