Science rests on replicability and reproducibility. Without them, we can’t separate useful insights about the world from artifacts of chance, or even from fraud and malpractice by researchers. There are financial costs too: one study estimated the cost of irreproducible research at $28 billion every single year in preclinical research alone.

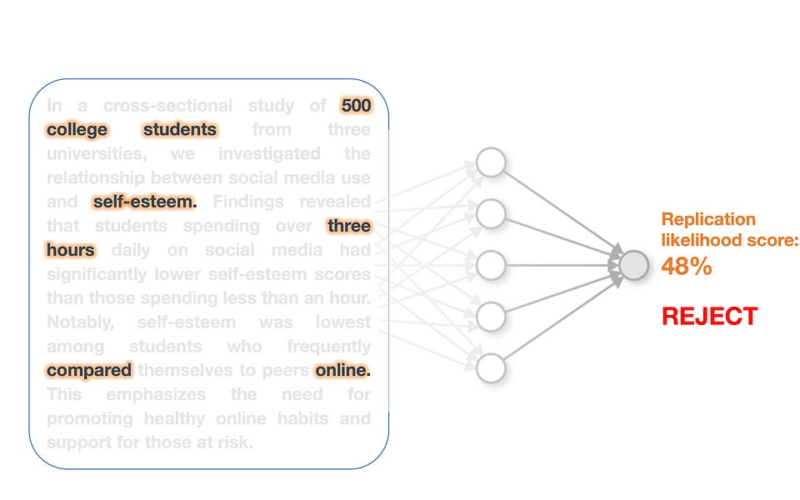

Earlier this year, a paper in the widely read PNAS journal raised the possibility of detecting non-replicable findings using machine learning (ML). The authors claimed ML could be used to predict which studies would replicate, and ultimately be used for deciding which studies should be replicated manually. This follows a 2020 PNAS paper by the same authors, as well as other calls to use ML for evaluating scientific replicability. If ML is indeed useful for detecting non-replicable findings, it would be amazing, because testing replicability is laborious — it usually involves running the study again — and hence rarely done.

In our past work, we have shown how errors affect hundreds of papers in over a dozen fields that have adopted ML methods, so we were skeptical of these claims. In our experience, models that try to predict consequential social outcomes are particularly likely to be flawed. Worse, these flaws are often inherent to the use of ML in these settings, and thus not fixable.

So we decided to dig in—through a collaboration led by our colleague, Princeton psychology professor Molly Crockett. Yale anthropology professor Lisa Messeri and Princeton psychology PhD student Xuechunzi Bai were our other collaborators.

We found that the model developed by the authors has major drawbacks, which we describe in a letter sent to PNAS, published earlier this week. We explain why the use of ML for estimating replicability cannot offer a shortcut to the hard work of building more credible scientific practices and institutions.

The model described in the paper was trained on an extremely small sample: just 388 papers that had been evaluated in earlier replication efforts (such as a landmark study by the Open Science Foundation).
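To get a feel for why 388 labeled papers is so few, here is a back-of-the-envelope sketch of the uncertainty in an accuracy estimate. It uses the standard normal approximation for a binomial proportion. The 70% accuracy figure and the 20% held-out split are our own illustrative assumptions, not numbers from the paper.

```python
import math

def accuracy_margin(accuracy, n_test, z=1.96):
    """Approximate 95% margin of error for an accuracy measured on n_test papers.

    Uses the normal approximation to the binomial: z * sqrt(p * (1 - p) / n).
    """
    return z * math.sqrt(accuracy * (1 - accuracy) / n_test)

# Hypothetical setup: hold out 20% of the 388 labeled papers for testing.
n_labeled = 388
n_test = round(0.2 * n_labeled)   # 78 papers
assumed_accuracy = 0.70           # illustrative value, not from the paper

margin_small = accuracy_margin(assumed_accuracy, n_test)
margin_all = accuracy_margin(assumed_accuracy, n_labeled)
print(f"held-out set of {n_test}: +/- {margin_small:.3f}")
print(f"all {n_labeled} papers:   +/- {margin_all:.3f}")
```

Under these assumptions, a measured accuracy of 70% on 78 held-out papers is compatible with a true accuracy anywhere from about 60% to about 80%. And that is before accounting for any differences between the labeled papers and the papers the model is later applied to.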

The authors used the model to make predictions for over 14,000 papers. But these papers came from journals and subfields drastically different from the ones the model was trained on. This is another pitfall: the accuracy of ML methods can change sharply even when the test data differ only slightly in distribution from the training data. The authors propose that their model be used for particularly hard-to-replicate studies, such as those conducted over multiple years, or with a hard-to-find sample. But such studies are even more likely to be outliers in the distribution.
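A toy simulation makes the distribution-shift problem concrete. This is a minimal sketch, not the authors' model: each "paper" is reduced to a single made-up numeric feature, the classifier is a simple threshold, and the size of the shift between the training and deployment populations is an assumption we picked for illustration.

```python
import random
import statistics

def sample(n, mean0, mean1, rng):
    """Draw n (feature, label) pairs; the feature is Gaussian around a per-class mean."""
    data = []
    for _ in range(n):
        label = rng.randint(0, 1)          # 1 = replicated, 0 = did not replicate
        mean = mean1 if label else mean0
        data.append((rng.gauss(mean, 1.0), label))
    return data

def fit_threshold(train):
    """Place the decision threshold midway between the two class means."""
    m0 = statistics.mean(x for x, y in train if y == 0)
    m1 = statistics.mean(x for x, y in train if y == 1)
    return (m0 + m1) / 2

def accuracy(threshold, data):
    """Fraction of papers where 'feature above threshold' matches the label."""
    correct = sum((x > threshold) == bool(y) for x, y in data)
    return correct / len(data)

rng = random.Random(0)                      # fixed seed for repeatability
train = sample(388, 0.0, 2.0, rng)          # small training set
threshold = fit_threshold(train)

same = sample(5000, 0.0, 2.0, rng)          # same distribution as training
shifted = sample(5000, 1.5, 3.5, rng)       # hypothetical new subfield: feature shifted by 1.5

acc_same = accuracy(threshold, same)
acc_shifted = accuracy(threshold, shifted)
print(f"in-distribution accuracy: {acc_same:.2f}")
print(f"shifted accuracy:         {acc_shifted:.2f}")
```

In this setup the relationship between the feature and the outcome is identical in both populations; only the baseline value of the feature moves. Accuracy should still fall from around 84% to around 65%, because the threshold learned on the training data no longer sits in the right place.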

The model was trained using correlations between superficial features of papers, like the text, and the outcome of a past replication effort. But the kind of text features used for creating ML models can be easily gamed to avoid triggering the model. This is an example of Goodhart’s law: when a measurement is used for a decision-making process, it ceases to be a good measure. An apocryphal example is the cobra effect. When the colonial British government wanted to reduce the cobra population in India, it started handing out rewards for dead cobras. This led to people breeding cobras to claim rewards—the opposite of the intended effect. Since ML methods are easy to game in this way, this model wouldn’t be useful for long.

This effect would be most prominent if the model is used for making consequential decisions, such as funding allocations. In their 2020 paper, the authors stated that their ML model could be used to support SCORE, an effort by DARPA. In the present paper, the authors were careful not to make an appeal to funding agencies. But in a follow-up interview about the paper, one of the authors claimed the model could be useful for funding agencies.

This is an example of a pattern we’ve seen in other dubious research: the work is motivated by applications that can have harmful side effects, but the paper itself doesn’t make the connection, allowing the authors to deflect criticism, even as they use the publication to legitimize dubious uses.

Another group of authors—Aske Mottelson and Dimosthenis Kontogiorgos—also published their analysis of the original paper. They found that the model relied on the style of language used in papers to judge if a study was replicable. This finding reinforces our points. Since this style is likely to differ significantly across subfields of psychology, a model trained on one subfield is unlikely to be useful for another. And replicability scores can easily be gamed by changing linguistic style.
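To illustrate how a style-based score can be gamed, here is a deliberately crude sketch. The word list and weights are invented for this example; they are not taken from the original model or from Mottelson and Kontogiorgos’s analysis. The point is only that rewording a sentence changes the score while the underlying study stays exactly the same.

```python
import re

# Hypothetical style weights: negative words lower the "replicability" score.
STYLE_WEIGHTS = {
    "remarkable": -1.0,
    "striking": -1.0,
    "surprising": -0.5,
    "preregistered": 1.0,
    "replicated": 0.5,
}

def style_score(text):
    """Sum the weights of every known word in the text (unknown words count as 0)."""
    words = re.findall(r"[a-z]+", text.lower())
    return sum(STYLE_WEIGHTS.get(word, 0.0) for word in words)

original = "We report a remarkable and striking effect of priming on behavior."
# Same claim, same data, same study: only the adjectives have been swapped.
gamed = "We report a notable and clear effect of priming on behavior."

score_original = style_score(original)
score_gamed = style_score(gamed)
print(score_original, score_gamed)
```

A real model uses far richer text features, but the incentive is the same: once authors know that style affects the score, they can change the style without changing the science.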

The authors had a chance to respond to our piece but didn’t engage with the substance of our points. Their reply had other worrying elements. They claimed we “weirdly” cited a Jim Crow law to argue that ML errors could be inequitably distributed. The citation? Princeton professor Ruha Benjamin’s book “Race After Technology: Abolitionist Tools for the New Jim Code”, a foundational book in understanding algorithmic injustice. It appears that the authors didn’t understand even the name of the book, or bother to look it up.

On the whole, the experience of writing this letter was edifying. We don’t think ML for replication is in a state where it is likely to be useful for making important decisions, and we’re skeptical it ever can be, given the small amount of data on replicability available to train such models, the large variability in the type of research being judged, and the strong incentives to game any decision-making system that uses ML.
